https://github.com/Kingo71/BerryConverter

Usage:

./berryboot_conv.sh <name_image_to_convert> <name_converted_image>

Example:

./berryboot_conv.sh raspios.img raspios_berry.img

Resolved by running sudo apt-get install breeze-icon-theme.

Missing button icons in EKOS under Ubuntu 15.10

https://indilib.org/forum/ekos/988-missing-button-icons-in-ekos-under-ubuntu-15-10/50577.html

Kstars Integration issue with Gnome desktop

https://indilib.org/forum/ekos/3679-kstars-integration-issue-with-gnome-desktop.html

How to enable screen sharing in MS Teams Desktop on Ubuntu 22.04

https://askubuntu.com/questions/1405195/how-to-share-a-screen-in-ms-teams-or-zoom-from-ubuntu-22-04

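OBS Studio on Ubuntu 22.04 (fixing the Virtual Camera problem)

When I tried OBS Studio on Ubuntu 22.04, I ran into a problem. The Virtual Camera starts fine the first time, but once it has been stopped, clicking the Start button again does not bring it back up. (It becomes usable again after rebooting the OS.)

I found information online that resolves this problem, followed the steps, and it worked.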

https://github.com/obsproject/obs-studio/issues/4808

For Ubuntu users coming here after a recent upgrade to 22.04 LTS who are now running into this issue, the temporary downgrade/workaround discussed above still works:

Grab v4l2loopback-dkms_0.12.5-1_all.deb from https://packages.debian.org/sid/all/v4l2loopback-dkms/download

Install it: sudo dpkg -i v4l2loopback-dkms_0.12.5-1_all.deb

Hold it back so system updates don't wipe it out: sudo apt-mark hold v4l2loopback-dkms

Remove the hold in the future when upstream gets fixed: sudo apt-mark unhold v4l2loopback-dkms

The last version of v4l2loopback that worked properly is 0.12.5-1 and that's what we're installing above.

(Ubuntu 21.04 includes 0.12.5-1ubuntu1 and Ubuntu 22.04 includes 0.12.5-1ubuntu5)
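After the install and hold steps above, it may be worth confirming that the downgraded package is really the one in place. The following is a minimal sketch, not part of the linked issue: check_v4l2loopback is a hypothetical helper name, and it assumes a Debian/Ubuntu system where dpkg-query is available.

```shell
#!/bin/sh
# Sketch: confirm the downgraded v4l2loopback-dkms package is the one installed.
# check_v4l2loopback is a hypothetical helper name, not part of any package.

WANT_VERSION="0.12.5-1"   # the version installed by the steps above

check_v4l2loopback() {
    # Use the version passed as $1 if given; otherwise ask dpkg for the
    # installed version (empty when the package is not installed).
    have=${1:-$(dpkg-query -W -f='${Version}' v4l2loopback-dkms 2>/dev/null)}
    if [ "$have" = "$WANT_VERSION" ]; then
        echo "v4l2loopback-dkms $have: OK"
        return 0
    fi
    echo "v4l2loopback-dkms is '${have:-not installed}', expected $WANT_VERSION"
    return 1
}

# Example (commented out so that sourcing this file has no side effects):
# check_v4l2loopback && apt-mark showhold | grep -x v4l2loopback-dkms
```

The commented example also lists held packages with apt-mark showhold, so a missing hold shows up as a non-zero exit status.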

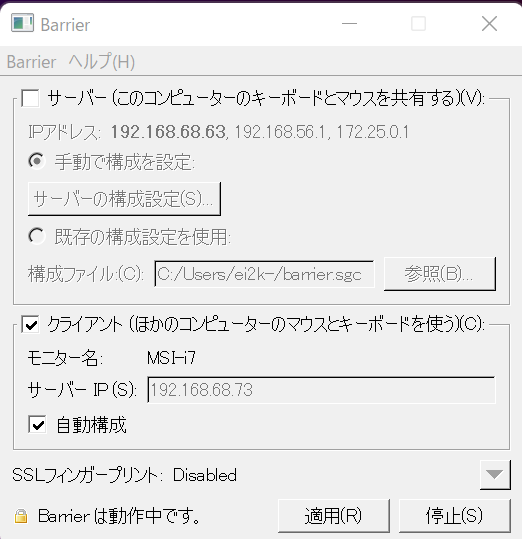

Between Windows machines, Microsoft's own (?) Mouse without Borders is convenient.

When a Linux machine is involved, I found that barrier can be used.

On Ubuntu 20.04 it is easy to install with apt install barrier.

For Windows 11, a binary is available for download.

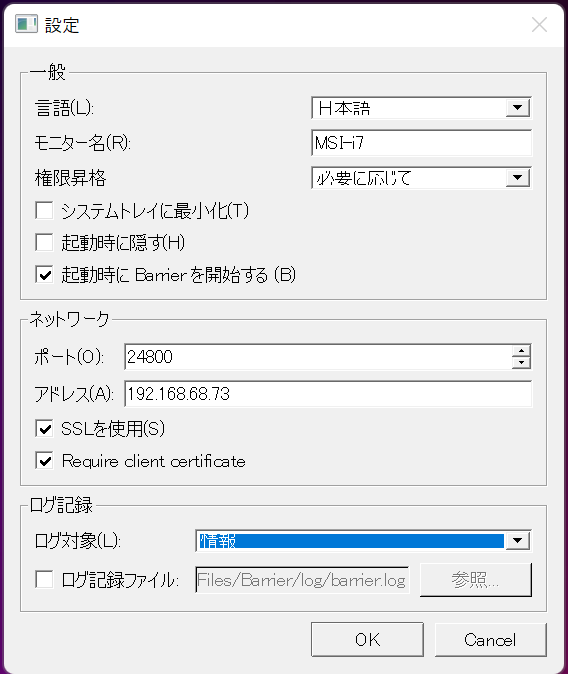

Example Windows settings when the Linux machine is the server and Windows is the client

Setting the server to start automatically

mkdir -p ~/.config/autostart

cp /usr/share/applications/barrier.desktop ~/.config/autostart/

Access JetBot by going to https://<jetbot_ip_address>:8888 and navigate to ~/Notebooks/teleoperation/

Open the teleoperation.ipynb file and follow the notebook

Connect USB adapter to PC

Go to https://html5gamepad.com, check the INDEX of Gamepad

Before you run the example, let's learn how the gamepad works.

The included gamepad supports two working modes: PC/PS3/Android mode and Xbox 360 mode.

The gamepad is set to PC/PS3/Android mode by default. In this mode the gamepad has two sub-modes, and you can press the HOME button to switch between them. In Mode 1, only one LED on the front panel lights up, the right joystick is mapped to buttons 0, 1, 2 and 3, and the joysticks report only 0 or -1/1. In Mode 2, two LEDs on the front panel light up and the right joystick is mapped to axes[2] and axes[5]. In this mode, you can get no intermediate values from the joysticks.

To switch between PC/PS3/Android mode and Xbox 360 mode, long-press the HOME button for about 7 s. In Xbox mode, the left joystick is mapped to axes[0] and axes[1], and the right joystick is mapped to axes[2] and axes[3]. This is exactly the mapping the NVIDIA examples use, so we recommend setting your gamepad to this mode. Otherwise, you need to modify the code.

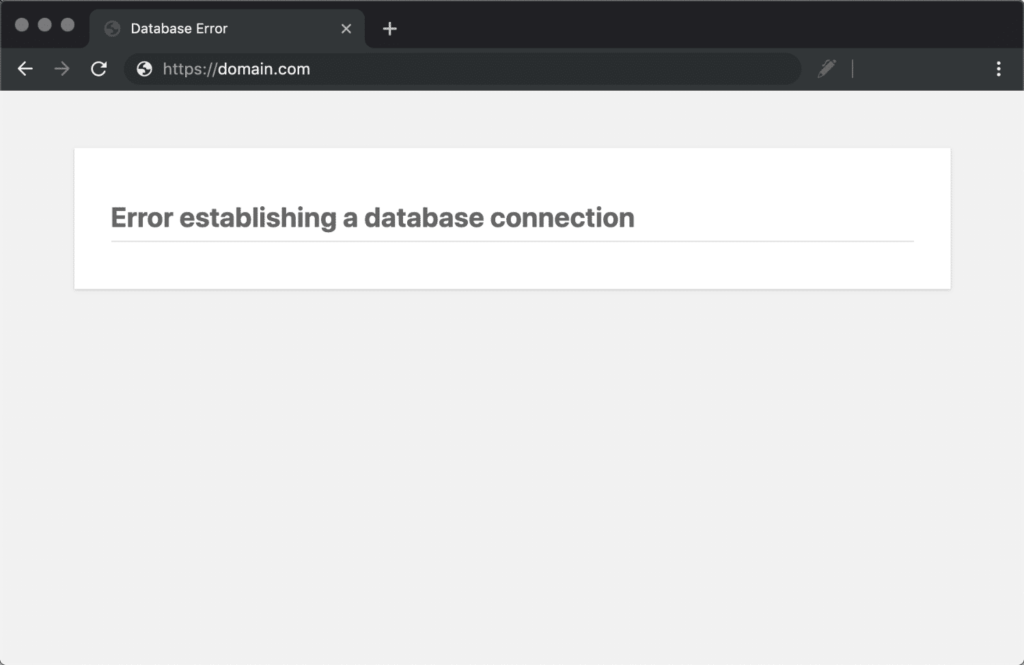

root@ps2:/var/lib/mysql# systemctl status mariadb

● mariadb.service - MariaDB 10.3.34 database server

Loaded: loaded (/lib/systemd/system/mariadb.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Fri 2022-04-01 11:02:34 JST; 10min ago

Docs: man:mysqld(8)

https://mariadb.com/kb/en/library/systemd/

Process: 575 ExecStartPre=/usr/bin/install -m 755 -o mysql -g root -d /var/run/mysqld (code=exited, status=0/SUCCESS)

Process: 600 ExecStartPre=/bin/sh -c systemctl unset-environment _WSREP_START_POSITION (code=exited, status=0/SUCCESS)

Process: 667 ExecStartPre=/bin/sh -c [ ! -e /usr/bin/galera_recovery ] && VAR= || VAR=`cd /usr/bin/..; /usr/bin/galera_recovery`; [ $? -eq 0 ] && systemctl s

Process: 823 ExecStart=/usr/sbin/mysqld $MYSQLD_OPTS $_WSREP_NEW_CLUSTER $_WSREP_START_POSITION (code=exited, status=1/FAILURE)

Main PID: 823 (code=exited, status=1/FAILURE)

Status: "MariaDB server is down"

4月 01 11:02:28 ps2 systemd[1]: Starting MariaDB 10.3.34 database server...

4月 01 11:02:31 ps2 mysqld[823]: 2022-04-01 11:02:31 0 [Note] /usr/sbin/mysqld (mysqld 10.3.34-MariaDB-0+deb10u1) starting as process 823 ...

4月 01 11:02:34 ps2 systemd[1]: mariadb.service: Main process exited, code=exited, status=1/FAILURE

4月 01 11:02:34 ps2 systemd[1]: mariadb.service: Failed with result 'exit-code'.

4月 01 11:02:34 ps2 systemd[1]: Failed to start MariaDB 10.3.34 database server.

Bloated log files, among other things, can apparently cause this.

root@ps2:/var/log/mysql# find / -name "ib_logfile*" 2>/dev/null

/var/lib/mysql/ib_logfile0

/var/lib/mysql/ib_logfile1

^C

root@ps2:/var/log/mysql# cd /var/lib/mysql/

root@ps2:/var/lib/mysql# ls -l

合計 176180

-rw-rw---- 1 mysql mysql 16384 4月 1 11:02 aria_log.00000001

-rw-rw---- 1 mysql mysql 52 4月 1 11:02 aria_log_control

-rw-r--r-- 1 root root 0 3月 31 19:09 debian-10.3.flag

-rw-rw---- 1 mysql mysql 6174 3月 31 19:17 ib_buffer_pool

-rw-rw---- 1 mysql mysql 50331648 3月 31 19:17 ib_logfile0

-rw-rw---- 1 mysql mysql 50331648 3月 31 19:10 ib_logfile1

-rw-rw---- 1 mysql mysql 79691776 3月 31 19:17 ibdata1

I tried deleting ib_logfile*.

root@ps2:/var/lib/mysql# rm -rvf ib_logfile*

'ib_logfile0' を削除しました

'ib_logfile1' を削除しました

After restarting mariadb, everything was OK!
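Deleting the redo logs outright is hard to undo. A more cautious variant of the rm step is to move the files aside so they can be put back if the restart goes wrong. The sketch below is not what was run in this session: backup_redo_logs is a hypothetical helper name, the backup directory is an assumption, and it assumes MariaDB is stopped before the files are moved.

```shell
#!/bin/sh
# Sketch: move InnoDB redo logs out of the data directory instead of deleting them.
# backup_redo_logs is a hypothetical helper; both paths are supplied by the caller.

backup_redo_logs() {
    datadir=$1      # e.g. /var/lib/mysql
    backup_dir=$2   # any directory outside the data directory (assumption)

    mkdir -p "$backup_dir" || return 1
    for f in "$datadir"/ib_logfile*; do
        # If nothing matches, the glob stays literal; skip it.
        [ -e "$f" ] || continue
        mv "$f" "$backup_dir"/ || return 1
        echo "moved $f -> $backup_dir/"
    done
}

# Example (commented out so that sourcing this file changes nothing):
# systemctl stop mariadb
# backup_redo_logs /var/lib/mysql /root/ib_logfile_backup
# systemctl start mariadb
```

Only files matching ib_logfile* are touched; ibdata1 and the other files in the data directory stay where they are.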

root@ps2:/var/lib/mysql# systemctl restart mariadb

root@ps2:/var/lib/mysql# systemctl status mariadb

● mariadb.service - MariaDB 10.3.34 database server

Loaded: loaded (/lib/systemd/system/mariadb.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2022-04-01 11:12:50 JST; 30s ago

Docs: man:mysqld(8)

https://mariadb.com/kb/en/library/systemd/

Process: 4321 ExecStartPre=/usr/bin/install -m 755 -o mysql -g root -d /var/run/mysqld (code=exited, status=0/SUCCESS)

Process: 4322 ExecStartPre=/bin/sh -c systemctl unset-environment _WSREP_START_POSITION (code=exited, status=0/SUCCESS)

Process: 4324 ExecStartPre=/bin/sh -c [ ! -e /usr/bin/galera_recovery ] && VAR= || VAR=`cd /usr/bin/..; /usr/bin/galera_recovery`; [ $? -eq 0 ] && systemctl

Process: 4404 ExecStartPost=/bin/sh -c systemctl unset-environment _WSREP_START_POSITION (code=exited, status=0/SUCCESS)

Process: 4407 ExecStartPost=/etc/mysql/debian-start (code=exited, status=0/SUCCESS)

Main PID: 4372 (mysqld)

Status: "Taking your SQL requests now..."

Tasks: 31 (limit: 4915)

CGroup: /system.slice/mariadb.service

└─4372 /usr/sbin/mysqld

I tried backing up WordPress with the "All-in-One WP Migration" plugin. The backup file created from the Export menu was a little over 400 MB (downloaded to the PC).

Steps:

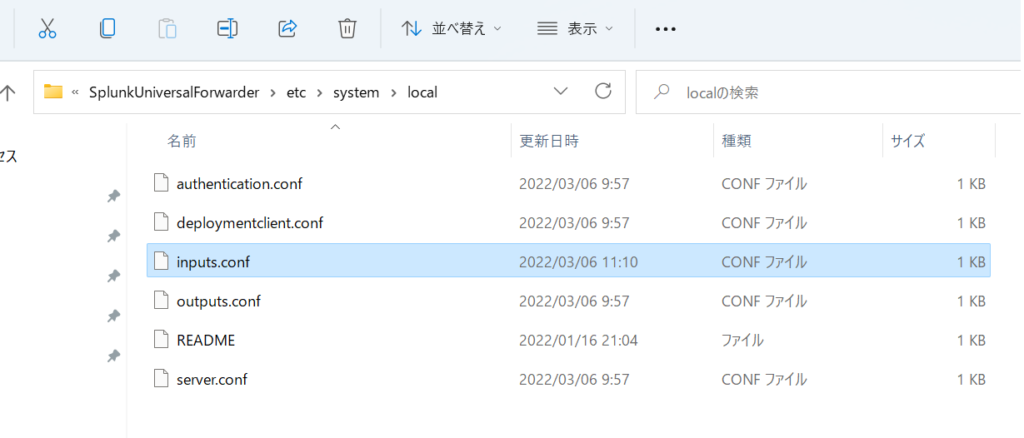

This assumes Splunk is already set up to the point where Windows event logs can be viewed.

Install the Splunk app "TA for Microsoft Windows Defender" into Splunk, then add the following to inputs.conf of the UniversalForwarder on the Windows side.

[WinEventLog://Microsoft-Windows-Windows Defender/Operational]

disabled = false

blacklist = 1001, 1150, 2011, 2000, 2001, 2002, 2010

After restarting the UniversalForwarder, the source type "WinEventLog:Microsoft-Windows-Windows Defender/Operational" becomes visible in Splunk search.

Installing the UniversalForwarder on a Raspberry Pi

Installation steps: https://www.splunk.com/ja_jp/blog/tips-and-tricks/how-to-install-universal-forwarder-01.html

Download link: https://www.splunk.com/en_us/download/universal-forwarder.html

Settings for forwarding to the index server:

Indexer setting (PORT 8089)

/opt/splunkforwarder/bin/splunk add forward-server <INDEXER_IP>:<INDEXER_PORT>

Setting the files to monitor

How to install Splunk Forwarder on Ubuntu

Example: sudo splunk add monitor /var/log/apache2/access.log

Monitor command

[sudo] $SPLUNK_HOME/bin/splunk add monitor <file or directory path to ingest> [-parameter value]

Setting Splunk to start at OS boot

/opt/splunkforwarder/bin/splunk enable boot-start
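The three forwarder steps above (forward-server, monitor, boot-start) can be collected into one place. The sketch below only prints the commands so they can be reviewed before anything is run. uf_setup_commands is a hypothetical helper name, and the default SPLUNK_HOME of /opt/splunkforwarder is assumed from the paths used in these notes.

```shell
#!/bin/sh
# Sketch: print the UniversalForwarder setup commands for review (dry run).
# uf_setup_commands is a hypothetical helper; it runs nothing by itself.

SPLUNK_HOME=${SPLUNK_HOME:-/opt/splunkforwarder}

uf_setup_commands() {
    indexer=$1   # <INDEXER_IP>:<INDEXER_PORT>
    target=$2    # file or directory to monitor

    printf '%s\n' \
        "$SPLUNK_HOME/bin/splunk add forward-server $indexer" \
        "$SPLUNK_HOME/bin/splunk add monitor $target" \
        "$SPLUNK_HOME/bin/splunk enable boot-start"
}

# Example (commented out; substitute real values for the placeholders):
# uf_setup_commands "<INDEXER_IP>:<INDEXER_PORT>" /var/log/apache2/access.log
```

Printing rather than executing keeps the helper safe to source; once the output looks right, the lines can be run by hand with sudo.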