Jupiter was shining just to the left of the Moon, so I photographed it.

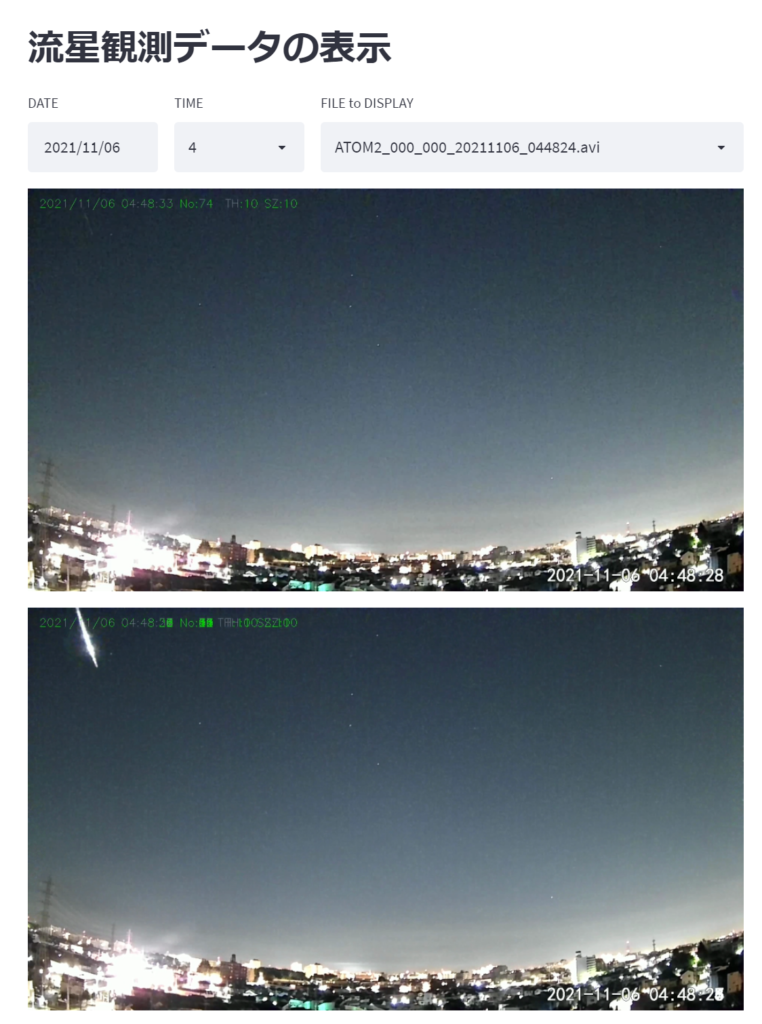

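STEP-1 A Python script (detectMETEORa.py) grabs images from an IP camera and, using processing built mainly around a motion-detection algorithm, saves clips that look like meteors as AVI videos.

STEP-2 Each recorded clip is played back through the GUI of a Streamlit script (play.py). For a clip judged by eye to show a meteor, clicking the COPY button generates one JPG image from the AVI file with a lighten-compositing (brightest-pixel) algorithm and, at the same time, converts the AVI video to H.264 video (MP4 format) and saves it.

STEP-3 The JPG and MP4 files are copied to a folder prepared in advance so that they can be browsed interactively in a web browser.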
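The motion detection in STEP-1 is not listed in this post. The following is only a minimal sketch of one common approach, frame differencing, and is not the actual logic of detectMETEORa.py. The function names `moving_pixel_count` and `is_motion` and both threshold values are assumptions chosen for illustration, and synthetic NumPy frames stand in for IP camera images.

```python
import numpy as np


def moving_pixel_count(prev_gray, cur_gray, diff_threshold=30):
    """Count pixels whose brightness changed by more than diff_threshold."""
    # Cast to a signed type first so the subtraction cannot wrap around.
    diff = np.abs(cur_gray.astype(np.int16) - prev_gray.astype(np.int16))
    return int(np.count_nonzero(diff > diff_threshold))


def is_motion(prev_gray, cur_gray, diff_threshold=30, min_pixels=5):
    """Flag a frame as a candidate when enough pixels have changed."""
    return moving_pixel_count(prev_gray, cur_gray, diff_threshold) >= min_pixels


# Synthetic example: a dark sky, then the same sky with a short bright streak.
sky = np.full((120, 160), 10, dtype=np.uint8)
streak = sky.copy()
for i in range(20):
    streak[40 + i, 60 + i] = 200  # diagonal trail, 20 pixels long

print(is_motion(sky, sky))     # identical frames
print(is_motion(sky, streak))  # frame containing the streak
```

In a real pipeline the thresholds would have to be tuned against sensor noise, clouds, and aircraft, which is why STEP-2 still relies on a visual check.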

PHP code for viewing in the browser: clicking the ▶ button plays the video that belongs to the displayed image. The images to display can be selected by month.

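<?php
$day = new DateTime();  // currently unused

// Month chosen in the form ("YYYY-MM"); both stay empty until the form is submitted.
$month = "";
$f_month = "";
if (isset($_POST['month']) && ($_POST['month'] != "")) {
    $month = $_POST['month'];
    // "YYYY-MM" -> "YYYYMM", matching the date part of the file names.
    $f_month = str_replace("-", "", $month);
}

echo "<div>";
echo "<H2>To play a video, click the play button at the lower left of each image.</H2>";
echo "<UL><LI>Hover the mouse over an image to show its file name.<BR>";
echo "File name: [camera name_detected frames_total frames_YYYYMMDD_HHmmSS.mp4]</LI></UL>";
echo "<form method=\"POST\">\n";
echo "<BR><LABEL>To change the month, click the right end of the year/month field.</LABEL>";
echo "<input type=\"month\" name=\"month\" value=\"" . htmlspecialchars($month) . "\">";
echo "<input type=\"submit\" value=\"Show\">";
echo "</form></div>";
//echo "$f_month<BR>\n";

$images = glob('meteor/COMP/*jpg');
$n = 0;
foreach ($images as $v) {
    // Show only images whose path contains the selected month.
    if ($f_month !== "" && strpos($v, $f_month) !== false) {
        // meteor/COMP/name.jpg -> meteor/BEST2/name.mp4
        $tmp = explode(".", $v);
        $mp4 = $tmp[0] . ".mp4";
        $mp4 = str_replace("COMP", "BEST2", $mp4);
        //echo "$mp4<BR>";
        $tmp = explode("/", $mp4);
        $title = $tmp[2];
        $msg = "<video controls muted title='$title' width='480' height='280' src='$mp4' poster='$v'></video>";
        echo $msg;
        // Line break after every second video (two per row).
        if ($n % 2 == 1) {
            echo "<BR>\n";
        }
        $n++;
    }
}
?>

I tried photographing Jupiter and Saturn with my newly purchased telescope.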

SkyWatcher MAK127SP Maksutov-Cassegrain optical tube (aperture 127 mm, focal length 1500 mm, F12)
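As a quick check on those figures, the short calculation below derives the focal ratio and two rule-of-thumb values from the aperture and focal length. The Dawes limit (116 / aperture in mm) is an empirical approximation, and the 40 arcsecond diameter used for Jupiter is an assumed typical value, not a measurement from this session.

```python
APERTURE_MM = 127.0
FOCAL_LENGTH_MM = 1500.0
ARCSEC_PER_RADIAN = 206265.0

# Focal ratio: focal length divided by aperture (quoted as F12 after rounding).
focal_ratio = FOCAL_LENGTH_MM / APERTURE_MM

# Dawes limit, an empirical resolution estimate in arcseconds.
dawes_limit_arcsec = 116.0 / APERTURE_MM

# Image scale at prime focus, in arcseconds per millimetre on the sensor.
plate_scale_arcsec_per_mm = ARCSEC_PER_RADIAN / FOCAL_LENGTH_MM

# Assumed apparent diameter of Jupiter (it varies with distance from Earth).
JUPITER_ARCSEC = 40.0
jupiter_size_mm = JUPITER_ARCSEC / plate_scale_arcsec_per_mm

print(f"focal ratio         : F{focal_ratio:.1f}")
print(f"Dawes limit         : {dawes_limit_arcsec:.2f} arcsec")
print(f"image scale         : {plate_scale_arcsec_per_mm:.1f} arcsec/mm")
print(f"Jupiter on the chip : {jupiter_size_mm:.2f} mm")
```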

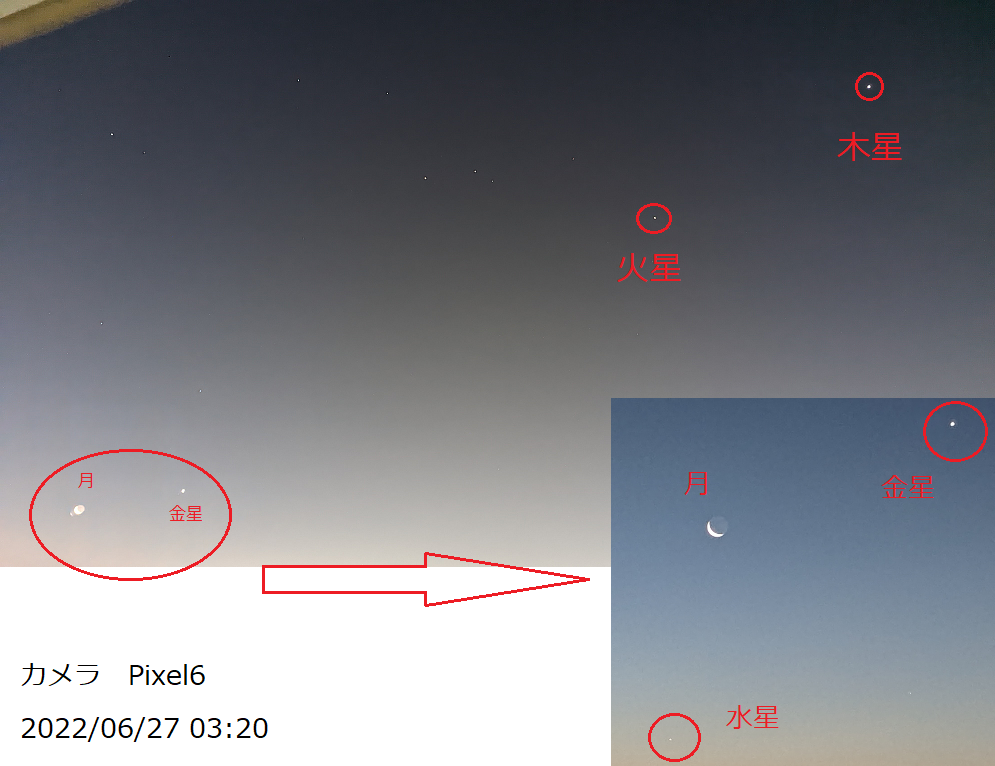

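The planets lined up in the eastern sky at dawn (June 27, around 3:20), and I photographed them with my smartphone (Pixel6).

They do not show up in this image, but when I shot at higher magnification with a Coolpix P950 digital camera, I could also confirm Neptune and Uranus.

The meteor-observation camera recorded a trail (?) that moved in a strange way. (UFO??)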

Solved with: sudo apt-get install breeze-icon-theme

Missing button icons in EKOS under Ubuntu 15.10

https://indilib.org/forum/ekos/988-missing-button-icons-in-ekos-under-ubuntu-15-10/50577.html

Kstars Integration issue with Gnome desktop

https://indilib.org/forum/ekos/3679-kstars-integration-issue-with-gnome-desktop.html

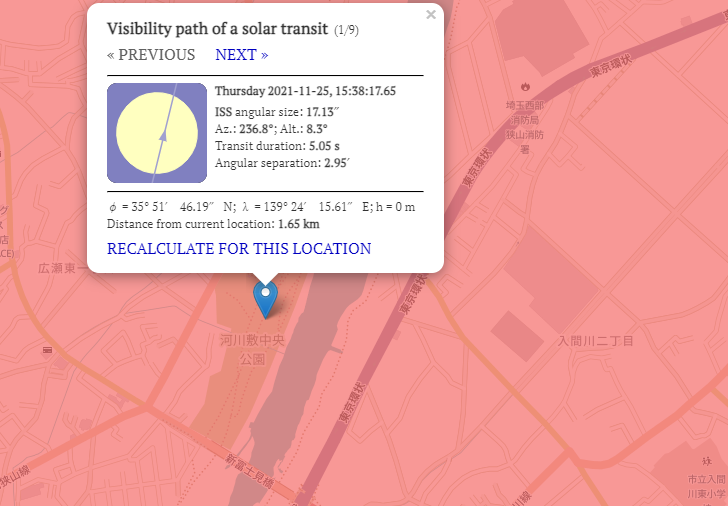

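ISS TRANSIT FINDER tells you the dates, times, and locations at which the International Space Station (ISS) passes in front of the Sun or the Moon.

On November 25 it looked as though a transit of the ISS across the Sun could be observed from a riverside park a short distance from my home, so I took a full set of equipment (camera, small equatorial mount, tripod) and tried to photograph it. On this pass the distance to the ISS was more than 1600 km, which is quite far, so I could not make out an ISS-like silhouette, but I was able to check the whole workflow up to the shot. The view below is scaled down and hard to see, so it is probably easier to watch on YouTube. The ISS moves from lower right to upper left.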
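To put the 1600 km distance in perspective, here is a rough small-angle estimate of how large the ISS appears against the Sun. The ISS span of about 109 m and the solar diameter of about 1900 arcseconds are approximate values assumed for illustration. Only the distance comes from the text above.

```python
import math

ISS_SPAN_M = 109.0            # assumed: approximate overall span of the ISS
DISTANCE_M = 1600e3           # from the text: more than 1600 km
SUN_DIAMETER_ARCSEC = 1900.0  # assumed: approximate apparent solar diameter

ARCSEC_PER_RADIAN = math.degrees(1.0) * 3600.0

# Small-angle approximation: angle [rad] = size / distance.
iss_arcsec = ISS_SPAN_M / DISTANCE_M * ARCSEC_PER_RADIAN
fraction_of_sun = iss_arcsec / SUN_DIAMETER_ARCSEC

print(f"ISS apparent size : {iss_arcsec:.1f} arcsec")
print(f"share of the Sun  : {fraction_of_sun:.2%} of the solar diameter")
```

Under these assumptions the ISS spans well under one percent of the solar diameter, which is consistent with no recognizable silhouette showing up in a scaled-down video.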

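Issues

Optimizing the camera's shooting parameters (video/still, shutter speed, shooting mode (continuous, high-speed continuous, etc.))

Equipment used

Bonus: while preparing for the shot, I recorded footage of an aircraft crossing the Sun's disk.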

# -*- coding: utf-8 -*-
import glob
import os
import re

import cv2
import numpy as np
import streamlit as st
from PIL import Image

PATH = '/home/metro//DATA/'


def comp_b2(A, B):
    # Lighten (brightest-pixel) compositing of two BGR frames.
    # https://nyanpyou.hatenablog.com/entry/2020/03/20/132937
    gray_img1 = cv2.cvtColor(A, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(B, cv2.COLOR_BGR2GRAY)
    # Mask for img1, built by comparing grayscale values (pixels where img1 is brighter).
    mask_img1 = np.where(gray_img1 > gray_img2, 255, 0).astype(np.uint8)
    # Mask for img2 (0 and 255 swapped): pixels where img2 is at least as bright.
    mask_img2 = np.where(mask_img1 == 255, 0, 255).astype(np.uint8)
    # Use the masks to extract pixels from the original frames.
    masked_img1 = cv2.bitwise_and(A, A, mask=mask_img1)
    masked_img2 = cv2.bitwise_and(B, B, mask=mask_img2)
    # The masks are complementary, so the sum cannot overflow.
    img3 = masked_img1 + masked_img2
    return img3


def disp(device):
    # Play the video frame by frame while accumulating a lighten composite.
    cap = cv2.VideoCapture(device)
    W = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    H = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    W2 = int(W / 2)
    H2 = int(H / 2)
    image_loc = st.empty()
    prev = None
    while cap.isOpened():
        ret, img = cap.read()
        if not ret:
            break
        if W == 1920:
            # Halve full-HD frames before display.
            img = cv2.resize(img, dsize=(W2, H2))
        #time.sleep(0.01)
        if prev is None:
            prev = img.copy()
        else:
            prev = comp_b2(prev, img)
        image_loc.image(Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB)))
    cap.release()
    # After playback, show the composite of all frames (if any were read).
    if prev is not None:
        image_loc = st.empty()
        image_loc.image(Image.fromarray(cv2.cvtColor(prev, cv2.COLOR_BGR2RGB)))
    #st.button('Replay')


def main():
    st.header("Meteor observation data viewer")
    col1, col2, col3 = st.columns([1, 1, 3])
    with col1:
        date = st.date_input('DATE')
    path = PATH + date.strftime("%Y%m%d")
    selected = []
    f_name = []
    TL = []
    if os.path.exists(path):
        files = glob.glob(path + '/*avi')
        # Time filter: m[4] is the time field (HHmmSS) of the file name.
        for opt in files:
            m = re.split('[_.]', os.path.basename(opt))
            if len(m) > 4:
                TL.append(int(m[4]) // 10000)
        TL = sorted(set(TL))  # remove duplicates, then sort
        # Select box for choosing the hour to process.
        with col2:
            selected_item = st.selectbox('TIME', TL)
        if selected_item is not None:
            selT = int(selected_item)
            for opt in files:
                m = re.split('[_.]', os.path.basename(opt))
                #if not(t>60000 and t<180000):
                if len(m) > 4 and int(m[4]) // 10000 == selT:
                    selected.append(opt)
        for name in selected:
            f_name.append(os.path.basename(name))
        with col3:
            option = st.selectbox('FILE to DISPLAY', f_name)
        if option is not None:
            disp(path + '/' + option)
    else:
        st.write('No data exists!')


if __name__ == '__main__':
    main()

# -*- coding: utf-8 -*-

import glob
import os

import cv2
import streamlit as st
from PIL import Image

PATH = '/home/mars/pWork/DATA/'


def disp(device):
    # Play the video by redrawing a single placeholder with each frame.
    cap = cv2.VideoCapture(device)
    image_loc = st.empty()
    while cap.isOpened():
        ret, img = cap.read()
        if ret:
            img = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
            image_loc.image(img)
        else:
            break
    cap.release()
    st.button('Replay')


def main():
    st.header("Meteor observation data viewer")
    date = st.date_input('Select date')
    path = PATH + date.strftime("%Y%m%d")
    #st.write(path)
    if os.path.exists(path):
        files = glob.glob(path + '/*avi')
        option = st.selectbox('Select file:', files)
        # selectbox returns None when the folder contains no AVI files.
        if option is not None:
            disp(option)
    else:
        st.write('No data exists!')


if __name__ == '__main__':
    main()

I would like to add code that narrows down the selectable files by various conditions.
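One possible way to do that narrowing is sketched below, assuming the AVI files follow the same naming pattern as the MP4 files (camera name_detected frames_total frames_YYYYMMDD_HHmmSS). The names `Clip`, `parse_clip`, and `filter_clips` and the sample paths are hypothetical and exist only for this illustration. The result could be passed to st.selectbox in place of the raw glob list.

```python
import os
import re
from typing import Iterable, List, NamedTuple, Optional


class Clip(NamedTuple):
    path: str
    camera: str
    detected: int  # number of frames in which motion was detected
    total: int     # total number of frames in the clip
    date: str      # YYYYMMDD
    hour: int      # hour taken from HHmmSS


# camera_detected_total_YYYYMMDD_HHmmSS.ext
_NAME_RE = re.compile(r'^(.+)_(\d+)_(\d+)_(\d{8})_(\d{6})\.\w+$')


def parse_clip(path: str) -> Optional[Clip]:
    """Split a file name into its fields; return None if it does not match."""
    m = _NAME_RE.match(os.path.basename(path))
    if m is None:
        return None
    camera, detected, total, date, hhmmss = m.groups()
    return Clip(path, camera, int(detected), int(total), date, int(hhmmss[:2]))


def filter_clips(paths: Iterable[str],
                 hours: Optional[Iterable[int]] = None,
                 min_detected: int = 0,
                 camera: Optional[str] = None) -> List[Clip]:
    """Keep only clips that satisfy every condition that was given."""
    allowed_hours = None if hours is None else set(hours)
    result = []
    for p in paths:
        clip = parse_clip(p)
        if clip is None:
            continue  # skip files that do not follow the naming pattern
        if allowed_hours is not None and clip.hour not in allowed_hours:
            continue
        if clip.detected < min_detected:
            continue
        if camera is not None and clip.camera != camera:
            continue
        result.append(clip)
    return result


# Hypothetical sample paths, used only to exercise the functions.
files = [
    '/data/20221125/cam1_12_80_20221125_031502.avi',
    '/data/20221125/cam1_3_45_20221125_224210.avi',
    '/data/20221125/notes.txt',
]
for clip in filter_clips(files, hours=range(0, 6), min_detected=5):
    print(clip.path, clip.hour, clip.detected)
```

Keeping each condition optional means more filters (for example a minimum ratio of detected to total frames) can be added later without changing existing callers.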