Archives for author "mars"

Creating a Heroku account and deploying Streamlit

Deploying a web app built with Streamlit to Heroku

curl https://cli-assets.heroku.com/install-ubuntu.sh | sh

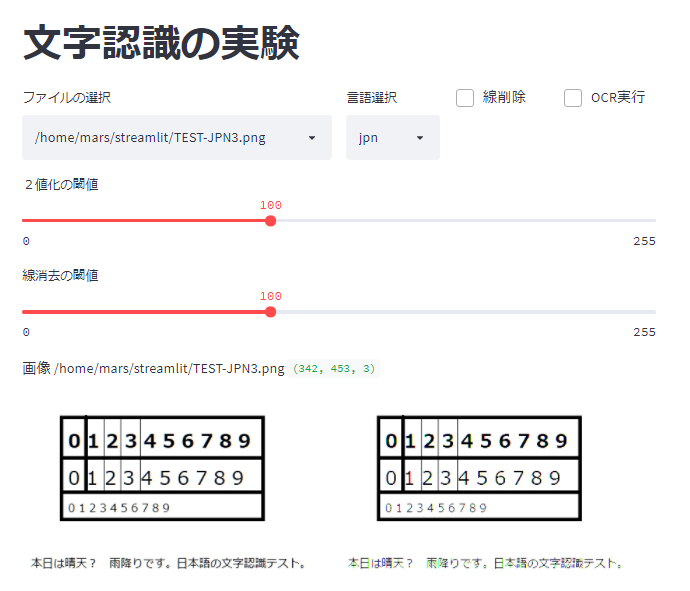

Setting OCR parameters interactively

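Using Streamlit, I made several settings adjustable interactively:

- the input file
- the language
- line (border) removal on/off
- the thresholds for binarization and line removal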

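The app shows the original image and the binarized image. When line removal is selected, it also shows the lines to be removed and the image with those lines erased.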

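Checking "OCR実行" (Run OCR) runs character recognition with pytesseract. Streamlit reruns the whole script every time a widget changes, and recognition takes a while. It is therefore better to leave this box unchecked while adjusting the thresholds.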
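One way to avoid repeating slow OCR on an image that has already been processed is to memoize the result per (image, config) pair. This matters because every rerun would otherwise call the OCR again. The sketch below is an assumption, not part of the app in this post:

- `run_ocr` is a hypothetical callable standing in for `pytesseract.image_to_string`.
- The image is passed as raw bytes so that it can be hashed.

Recent Streamlit releases also ship `st.cache_data` for this kind of caching.

```python
import hashlib
from typing import Callable, Dict, Tuple


class OcrCache:
    """Memoize OCR results keyed by (SHA-256 of the image bytes, config string)."""

    def __init__(self, run_ocr: Callable[[bytes, str], str]) -> None:
        # run_ocr is a hypothetical stand-in for the real (slow) OCR call
        self._run_ocr = run_ocr
        self._results: Dict[Tuple[str, str], str] = {}

    def recognize(self, image_bytes: bytes, config: str) -> str:
        key = (hashlib.sha256(image_bytes).hexdigest(), config)
        if key not in self._results:
            # Only call the OCR when this image/config pair has not been seen yet
            self._results[key] = self._run_ocr(image_bytes, config)
        return self._results[key]


if __name__ == "__main__":
    calls = []

    def fake_ocr(data: bytes, config: str) -> str:
        calls.append(config)
        return f"{len(data)} bytes, {config}"

    cache = OcrCache(fake_ocr)
    print(cache.recognize(b"\x00\x01", "-l jpn --psm 6"))
    print(cache.recognize(b"\x00\x01", "-l jpn --psm 6"))  # served from the cache
    print(len(calls))  # 1
```

The design choice is to key on the image content rather than the file name. A new threshold produces a new binarized image, so it correctly triggers a fresh OCR call.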

An example of the results

Python code

import streamlit as st
import cv2
from PIL import Image  # image processing library
from matplotlib import pyplot as plt  # plotting library
import numpy as np  # numerical/array library
import os  # OS utilities
import pytesseract  # Python wrapper for tesseract
import glob

files = glob.glob("/home/mars/streamlit/*png")
#print(files)

def main():
    st.title('文字認識の実験')  # "OCR experiment"

    col1, col2, col3, col4 = st.columns([3, 1, 1, 1])
    with col1:
        TGT = st.selectbox("ファイルの選択", files)  # "Select file"
        th2 = st.slider(label='2値化の閾値', min_value=0, max_value=255, value=100)  # binarization threshold
        th1 = st.slider(label='線消去の閾値', min_value=0, max_value=255, value=100)  # line-removal threshold (HoughLinesP)
    with col2:
        LNG = st.selectbox("言語選択", ['jpn', 'eng', 'number'])  # "Language"
    with col3:
        KEI = st.checkbox('線削除')  # "Remove lines"
    with col4:
        OCR = st.checkbox('OCR実行')  # "Run OCR"

    img = cv2.imread(TGT)
    #with pict[0]:
    st.write('画像', TGT, img.shape)  # "Image"
    #img = cv2.cvtColor(img, cv2.COLOR_RGBA2RGB)
    ret, img_thresh = cv2.threshold(img, th2, 255, cv2.THRESH_BINARY)
    im_h = cv2.hconcat([img, img_thresh])
    # OpenCV images are BGR; tell st.image so the colors are not swapped
    st.image(im_h, caption='元画像', channels='BGR')  # "Original image"

    no_lines = img_thresh
    if KEI:
        img2 = img.copy()
        img3 = img.copy()
        gray = cv2.cvtColor(img_thresh, cv2.COLOR_BGR2GRAY)
        gray_list = np.array(gray)
        #img2.image(gray_list, caption='GRAY',use_column_width=True)
        gray2 = cv2.bitwise_not(gray)  # invert so the lines become white on black
        gray2_list = np.array(gray2)
        lines = cv2.HoughLinesP(gray2, rho=1, theta=np.pi/360, threshold=th1, minLineLength=80, maxLineGap=5)
        # HoughLinesP returns None when it finds no line
        if lines is not None:
            for line in lines:
                x1, y1, x2, y2 = line[0]
                # Draw the detected line in green
                red_lines_img = cv2.line(img2, (x1, y1), (x2, y2), (0, 255, 0), 3)
                red_lines_np = np.array(red_lines_img)
                #cv2.imwrite("calendar_mod3.png", red_lines_img)
                # Erase the line (draw over it in white)
                no_lines_img = cv2.line(img_thresh, (x1, y1), (x2, y2), (255, 255, 255), 3)
                no_lines = np.array(no_lines_img)
            im_h = cv2.hconcat([red_lines_img, no_lines_img])
            st.image(im_h, caption='No lines', channels='BGR')

    if OCR:
        #txt = pytesseract.image_to_string(no_lines, lang="eng",config='--psm 11')
        # The page segmentation mode needs a space: "--psm 6", not "--psm6"
        conf = '-l ' + LNG + ' --psm 6'
        txt = pytesseract.image_to_string(no_lines, config=conf)
        st.write(txt)


if __name__ == '__main__':
    main()
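The `conf` string is easy to get wrong. Tesseract expects `--psm 6` with a space. Also, `number` is not a standard Tesseract language pack, unlike `eng` or `jpn`.

A minimal sketch of a helper that builds the option string follows. It assumes that the UI choice "number" should mean English recognition restricted to digits via Tesseract's `tessedit_char_whitelist` variable. As far as I know, the LSTM engine honors the whitelist only from Tesseract 4.1 onward, so on a 4.0.0 build it may have no effect. The helper name is hypothetical.

```python
from typing import List


def build_tesseract_config(lang: str, psm: int = 6) -> str:
    """Build the option string passed as `config=` to pytesseract.

    Assumption: the UI choice 'number' maps to English plus a digit
    whitelist; any other value is passed through as a language code.
    """
    opts: List[str] = []
    if lang == "number":
        opts += ["-l", "eng", "-c", "tessedit_char_whitelist=0123456789"]
    else:
        opts += ["-l", lang]
    # Keep a space between --psm and its value
    opts += ["--psm", str(psm)]
    return " ".join(opts)


print(build_tesseract_config("jpn"))     # -l jpn --psm 6
print(build_tesseract_config("number"))  # -l eng -c tessedit_char_whitelist=0123456789 --psm 6
```

In the app, this would replace the hand-built string: `txt = pytesseract.image_to_string(no_lines, config=build_tesseract_config(LNG))`.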

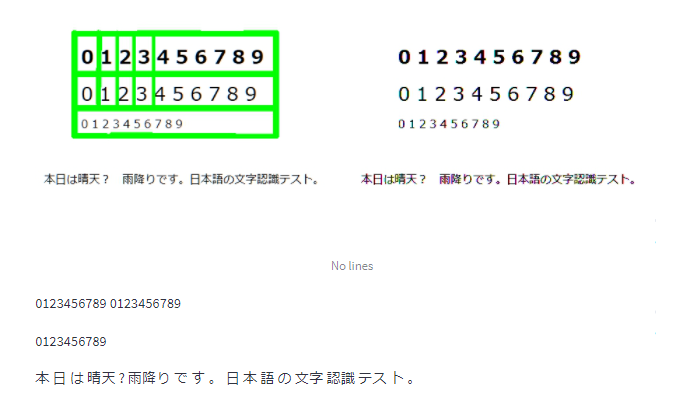

Cropping a sudoku puzzle one cell at a time and trying to recognize each digit
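The crop for each cell is driven by three pairs of constants:

- the cell pitch (`dx`, `dy` = 52)
- the offset of the first cell (`ox`, `oy` = 10)
- the crop size (`tD`, `tB` = 38)

An extra 2-pixel margin is taken on the top and left. The sketch below computes the same row/column slice bounds without OpenCV. This lets you check that all 81 crops fit inside the image before any OCR runs. The function names and the `margin` parameter are illustrative, not from the original script.

```python
from typing import Tuple

Bounds = Tuple[int, int, int, int]  # (row_start, row_end, col_start, col_end)


def cell_bounds(row: int, col: int,
                pitch: Tuple[int, int] = (52, 52),
                offset: Tuple[int, int] = (10, 10),
                size: Tuple[int, int] = (38, 38),
                margin: int = 2) -> Bounds:
    """Slice bounds of one cell, mirroring img[tt:t+tD, bb:b+tB] in the script."""
    top = row * pitch[0] + offset[0]
    left = col * pitch[1] + offset[1]
    return (top - margin, top + size[0], left - margin, left + size[1])


def grid_fits(n: int, image_shape: Tuple[int, int]) -> bool:
    """True if every crop of an n x n grid lies inside an image of (height, width)."""
    h, w = image_shape
    for r in range(n):
        for c in range(n):
            r0, r1, c0, c1 = cell_bounds(r, c)
            if r0 < 0 or c0 < 0 or r1 > h or c1 > w:
                return False
    return True


print(cell_bounds(0, 0))  # (8, 48, 8, 48)
print(cell_bounds(8, 8))  # (424, 464, 424, 464)
```

With these constants the last crop ends at pixel 464, so the image must be at least 464 x 464 pixels for every slice to be a full 40 x 40 crop.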

import cv2
from PIL import Image  # image processing library
from matplotlib import pyplot as plt  # plotting library
import numpy as np  # numerical/array library
import os  # OS utilities
import pytesseract  # Python wrapper for tesseract
import unicodedata
from pytesseract import Output

def remove_control_characters(s):
    # Drop every character whose Unicode category starts with "C" (control, format, ...)
    return "".join(ch for ch in s if unicodedata.category(ch)[0] != "C")

# The values below are specific to this image and do not generalize.
dx, dy = 52, 52   # cell pitch in pixels
ox, oy = 10, 10   # offset of the first cell
tD, tB = 38, 38   # crop size
# Expected answers, row by row ('0' = blank cell)
pz = '100006800500000030000720000942003706703004100805009000476392581251087360098165420'

img = cv2.imread("/home/mars/TEST50-2a.png")
#cv2.imshow('sudoku',img)

ok, ng = 0, 0
for x in range(9):          # x: row
    out = ''                # per-row result string (must exist before the first +=)
    for y in range(9):      # y: column
        t = x*dx + ox
        b = y*dy + oy
        tt = t - 2
        bb = b - 2
        #cv2.rectangle(img,(t,b),(t+tD,b+tB),(0,0,255))
        sliced = img[tt:t+tD, bb:b+tB]
        #sliced=cv2.threshold(tmp, 150, 255, cv2.THRESH_BINARY)
        im_list = np.array(sliced)
        img_rgb = cv2.cvtColor(im_list, cv2.COLOR_BGR2RGB)
        pos = str(x) + ',' + str(y)
        #txt = pytesseract.image_to_string(im_list,lang="jpn",config='--psm 10 outputbase digits')
        txt = pytesseract.image_to_string(sliced, config='-l eng --psm 6 outputbase digits')
        junk = remove_control_characters(txt)
        #print(x,y,len(junk))
        ans = pz[x*9 + y]
        if len(junk) < 1:
            # Nothing recognized: correct only if the cell is blank
            if ans == '0':
                ok = ok + 1
                junk = 'O'
            else:
                junk = 'X'
                ng = ng + 1
        else:
            # Something recognized: correct only if it matches the expected digit
            if ans == junk:
                ok = ok + 1
            else:
                junk = 'X'
                ng = ng + 1
        out = out + junk + '(' + ans + ')'
        #cv2.imshow('slice:'+pos,sliced)
    print(str(x) + '--->', out, ' correct=', ok, ' in-correct=', ng)

#cv2.imshow('slice',img)
print('Success rate:', round(100*ok/81, 2), '[%]')
print('done')

Accuracy: 86%

0---> 1(1)O(0)O(0)O(0)O(0)6(6)8(8)O(0)O(0) correct= 9 in-correct= 0

1---> X(5)O(0)O(0)O(0)X(0)O(0)O(0)3(3)O(0) correct= 16 in-correct= 2

2---> O(0)O(0)O(0)X(7)2(2)O(0)O(0)O(0)O(0) correct= 24 in-correct= 3

3---> 9(9)4(4)2(2)O(0)O(0)3(3)X(7)O(0)6(6) correct= 32 in-correct= 4

4---> 7(7)O(0)3(3)O(0)O(0)4(4)X(1)O(0)O(0) correct= 40 in-correct= 5

5---> 8(8)X(0)5(5)O(0)X(0)9(9)O(0)O(0)O(0) correct= 47 in-correct= 7

6---> 4(4)7(7)6(6)3(3)9(9)2(2)5(5)8(8)X(1) correct= 55 in-correct= 8

7---> X(2)5(5)X(1)O(0)8(8)7(7)3(3)6(6)O(0) correct= 62 in-correct= 10

8---> O(0)9(9)8(8)X(1)6(6)5(5)4(4)2(2)O(0) correct= 70 in-correct= 11

Success rate: 86.42 [%]

done
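The scoring rule above has two cases:

- An empty OCR result counts as correct only for a blank cell ('0' in `pz`).
- A non-empty result counts as correct only if it equals the expected digit exactly.

The sketch below applies the same rule as a standalone function. It takes the recognized strings (after control characters are removed) in row-major order. The function name is illustrative. The percentage uses the same formula as the script: 70 correct cells out of 81 gives round(100*70/81, 2) = 86.42.

```python
from typing import List, Tuple


def score(expected: str, recognized: List[str]) -> Tuple[int, int, float]:
    """Return (correct, incorrect, success rate in %) for one puzzle."""
    if len(expected) != len(recognized):
        raise ValueError("expected and recognized must have the same length")
    ok = ng = 0
    for ans, got in zip(expected, recognized):
        if got == "":
            hit = ans == "0"      # nothing read: right only for a blank cell
        else:
            hit = got == ans      # something read: must match the digit exactly
        if hit:
            ok += 1
        else:
            ng += 1
    return ok, ng, round(100 * ok / len(expected), 2)


print(score("1006", ["1", "", "", "6"]))   # (4, 0, 100.0)
print(score("1006", ["1", "7", "", "8"]))  # (2, 2, 50.0)
```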

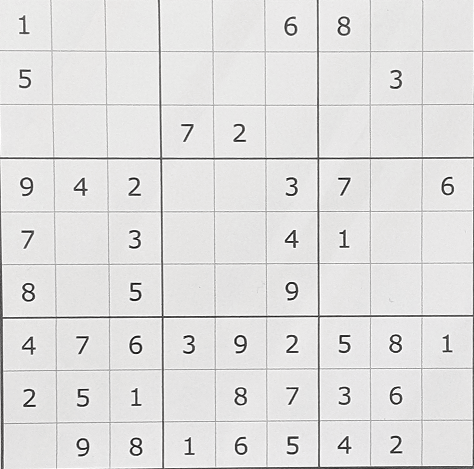

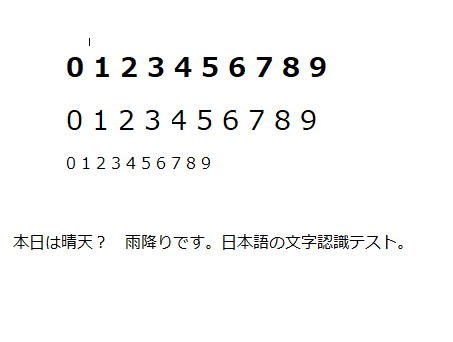

Trying the open-source OCR engine Tesseract (2): images taken with a camera

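As the results show, the recognition rate drops considerably. When using a camera, take care to get an image of the best possible quality. Skew and contrast in particular look likely to cause problems.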
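For low-contrast camera images, a fixed binarization threshold (like the slider value in the Streamlit app) rarely suits every photo. One standard remedy, not something tested in this post, is Otsu's method. It picks the threshold that maximizes the between-class variance of the gray-level histogram. OpenCV exposes it as the `cv2.THRESH_OTSU` flag of `cv2.threshold`.

The sketch below is a pure-Python version of the computation for 8-bit gray levels. The sample pixel values are made up for illustration.

```python
from typing import Sequence


def otsu_threshold(pixels: Sequence[int]) -> int:
    """Otsu's threshold for 8-bit gray levels.

    Pixels <= the returned value form one class, pixels above it the other.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0        # number of pixels with level <= t
    sum0 = 0      # sum of the levels <= t
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance w0*w1*(mu0-mu1)^2 / total^2
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:   # strict ">" keeps the first of equally good levels
            best_var, best_t = between, t
    return best_t


# Dark text (around 40) on a gray, low-contrast background (around 150)
sample = [38, 40, 42] * 10 + [148, 150, 152] * 30
t = otsu_threshold(sample)
print(t)  # 42
binary = [255 if p > t else 0 for p in sample]
```

Any level from 42 to 147 separates these two groups equally well; this loop returns the first one. With OpenCV, the equivalent call is `cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)` on a single-channel image.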

Result:

0123456789 |

前 量 旧 |

0123456789 :

623456789 :

。 本 日 は 晴天 ? 雨降り で す 。 日 本 語 の 文字 認識 テス ト 。

Recognition result:

Start....

Done.

UL

345675 9 リリ

| 0123456789 |

oo | 上

II

Trying the open-source OCR engine Tesseract

Environment

$ uname -a

Linux ps2 5.10.63-v7l+ #1457 SMP Tue Sep 28 11:26:14 BST 2021 armv7l GNU/Linux

$ cat /etc/os-release

PRETTY_NAME="Raspbian GNU/Linux 10 (buster)"

NAME="Raspbian GNU/Linux"

VERSION_ID="10"

VERSION="10 (buster)"

VERSION_CODENAME=buster

ID=raspbian

ID_LIKE=debian

Site I used as a reference for the installation:

「Raspberry Pi 3B+における、Tesseract(5.0.0 alpha)のインストール方法と基本操作」 ("How to install Tesseract (5.0.0 alpha) on a Raspberry Pi 3B+, and basic usage")

$ sudo apt-get install tesseract-ocr-script-jpan

$ tesseract --version

tesseract 4.0.0

leptonica-1.76.0

libgif 5.1.4 : libjpeg 6b (libjpeg-turbo 1.5.2) : libpng 1.6.36 : libtiff 4.1.0 : zlib 1.2.11 : libwebp 0.6.1 : libopenjp2 2.3.0

$ tesseract --list-langs

List of available languages (3):

Japanese

eng

osd

Fetching and installing the Japanese trained data (accuracy-oriented, from tessdata_best)

$ git clone https://github.com/tesseract-ocr/tessdata_best.git

$ sudo cp tessdata_best/jpn* /usr/share/tesseract-ocr/4.00/tessdata/

Checking the added trained data

$ tesseract --list-langs

List of available languages (5):

Japanese

eng

jpn

jpn_vert

osd
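To check from Python (for example in a notebook) which language files were actually copied, you can scan the tessdata directory for `*.traineddata` files, including subdirectories. The directory path is the one used in the `cp` command above. This is a rough equivalent of `tesseract --list-langs`, not a description of how Tesseract itself enumerates languages. The function name is hypothetical.

```python
from pathlib import Path
from typing import List


def list_traineddata(tessdata_dir: str) -> List[str]:
    """Sorted names of *.traineddata files under tessdata_dir (searched recursively)."""
    root = Path(tessdata_dir)
    if not root.is_dir():
        return []
    return sorted(p.stem for p in root.rglob("*.traineddata"))


if __name__ == "__main__":
    print(list_traineddata("/usr/share/tesseract-ocr/4.00/tessdata"))
```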

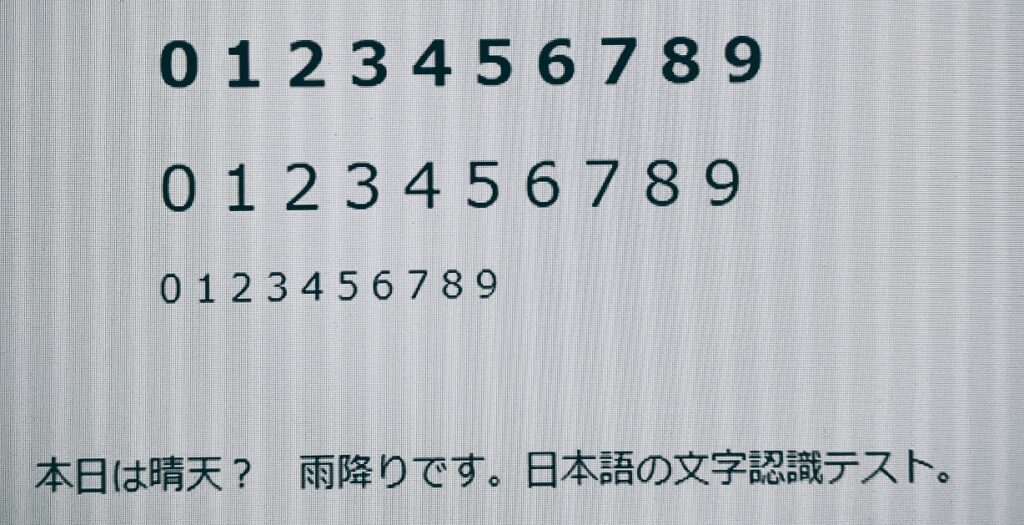

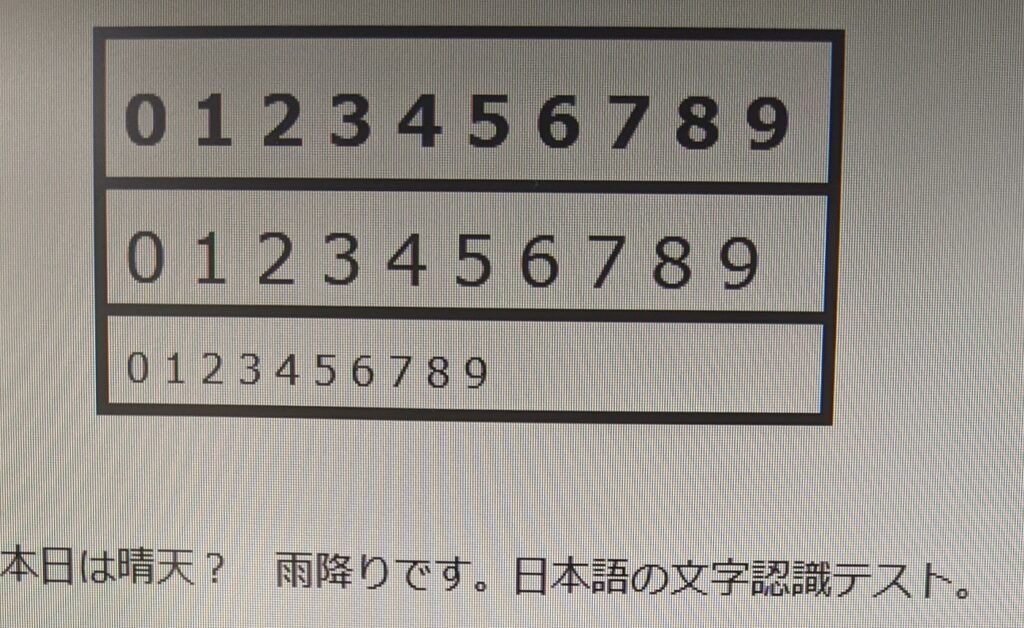

Let's run recognition on TEST-JPN.png, a file I drew with a paint program.

I also checked the effect of different font sizes (20/12) and of a bold typeface.

$ time tesseract TEST-JPN.png stdout -l jpn

0123456789

0123456789

0123456789

本 日 は 晴天 ? 雨降り で す 。 日 本 語 の 文字 認識 テス ト 。

real 1m4.283s

user 1m43.622s

sys 0m3.248s

In this example, the recognition rate was a remarkable 100%.

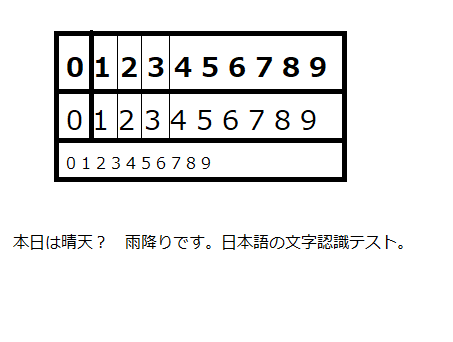

I also tried digits placed inside ruled lines (TEST-JPN3.png).

Once the text is laid out as a table, nothing is recognized at all!

$ time tesseract TEST-JPN3.png stdout -l jpn

Empty page!!

Empty page!!

real 0m4.568s

user 0m2.977s

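sys 0m1.382s

I found an article about recognizing tables and tried its approach as well. At first, pytesseract.image_to_string seemed never to return. It does return, but with an empty string, so recognition apparently fails. The image that the command line above recognized was also recognized without problems by the Python code.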

「『表の文字』と『欄外の文字』の認識(Python + Tesseract)」 ("Recognizing text inside tables and text outside them (Python + Tesseract)")
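When `image_to_string` comes back empty, `pytesseract.image_to_data(img, lang="jpn", output_type=Output.DICT)` can show what Tesseract found word by word. It reports a confidence per box, with -1 for boxes that are not words. The `Output` import also appears in the sudoku script.

The helper below keeps only reasonably confident words from such a dictionary. It makes a few assumptions:

- The dict has parallel `text` and `conf` lists.
- `conf` is converted with `float()`, because depending on the pytesseract version it may be a string or a number.
- The cutoff of 60 is an arbitrary example value.

The sample dict is hand-made, not real Tesseract output.

```python
from typing import Dict, List, Sequence, Tuple


def confident_words(data: Dict[str, Sequence], min_conf: float = 60.0) -> List[Tuple[str, float]]:
    """(word, confidence) pairs whose confidence is at least min_conf.

    `data` is assumed to look like the result of
    pytesseract.image_to_data(img, output_type=Output.DICT).
    """
    words = []
    for text, conf in zip(data["text"], data["conf"]):
        c = float(conf)  # conf may be str, int or float depending on the version
        if text.strip() and c >= min_conf:
            words.append((text, c))
    return words


# Hand-made example in the same shape (not real Tesseract output)
sample = {"text": ["", "0123456789", "oo", ""], "conf": ["-1", "91.5", "23", -1]}
print(confident_words(sample))  # [('0123456789', 91.5)]
```

If even this list is empty for a table image, Tesseract found no text boxes at all. That points to preprocessing (such as removing the ruled lines) rather than the OCR settings.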

From here on, pytesseract is installed in a Jupyter notebook:

!pip install pytesseract

Looking in indexes: https://pypi.org/simple, https://www.piwheels.org/simple

Collecting pytesseract

Downloading https://www.piwheels.org/simple/pytesseract/pytesseract-0.3.8-py2.py3-none-any.whl (14 kB)

Requirement already satisfied: Pillow in /home/mars/.pyenv/versions/3.7.3/lib/python3.7/site-packages (from pytesseract) (8.3.1)

Installing collected packages: pytesseract

Successfully installed pytesseract-0.3.8

Recognition test code:

###############################################################################
# Library imports
###############################################################################
import os  # OS utilities
import pytesseract  # Python wrapper for tesseract
from PIL import Image  # image processing library
import matplotlib.pyplot as plt  # plotting library
import numpy as np  # numerical/array library

img = Image.open('/home/mars/TEST-JPN3.png')
# Convert the image to an array
im_list = np.array(img)
# Hand the array to the plotting library
plt.imshow(im_list)
# Show it
plt.show()
print('Start....')
# Extract the text
txt = pytesseract.image_to_string(img, lang="jpn")
# Print the extracted text
print('Done.')
print(txt)
print()

Adding a step that removes the ruled lines:

import cv2

from PIL import Image # 画像処理ライブラリ

from matplotlib import pyplot as plt # データプロット用ライブラリ

import numpy as np # データ分析用ライブラリ

import os # os の情報を扱うライブラリ

import pytesseract # tesseract の python 用ライブラリ

# 処理の対象

img = cv2.imread("/home/mars/TEST-JPN4.png")

img2 = img.copy()

img3 = img.copy()

# グレースケール

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

gray_list = np.array(gray)

# データプロットライブラリに貼り付け

#plt.imshow(gray_list)

#cv2.imwrite("calendar_mod.png", gray)

## 反転 ネガポジ変換

gray2 = cv2.bitwise_not(gray)

gray2_list = np.array(gray2)

#plt.imshow(gray2_list)

#cv2.imwrite("calendar_mod2.png", gray2)

lines = cv2.HoughLinesP(gray2, rho=1, theta=np.pi/360, threshold=80, minLineLength=80, maxLineGap=5)

for line in lines:

x1, y1, x2, y2 = line[0]

# 赤線を引く

red_lines_img = cv2.line(img2, (x1,y1), (x2,y2), (0,0,255), 3)

red_lines_np=np.array( red_lines_img)

#cv2.imwrite("calendar_mod3.png", red_lines_img)

# 線を消す(白で線を引く)

no_lines_img = cv2.line(img3, (x1,y1), (x2,y2), (255,255,255), 3)

no_lines=np.array( no_lines_img)

plt.imshow(no_lines)

#plt.show()

#cv2.imwrite("calendar_mod4.png", no_lines_img)

print('OCR start...')

#txt = pytesseract.image_to_string(img, lang="jpn",config='osd --psm 6')

txt = pytesseract.image_to_string(no_lines_img, lang="jpn",config='--psm 6')

plt.show()

#cv2.imwrite("/home/mars/line_erased.png",no_lines_img)

print('---------OCR:results-----')

print(txt)

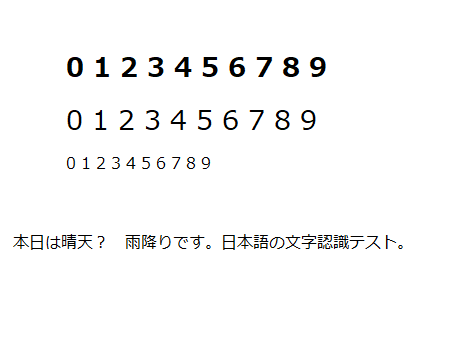

print('---------OCR done.---------') 線が消された画像

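Recognition result: everything was recognized correctly. That said, this is an ideally (?) clean image. Photos taken with a camera will probably need further testing and additional processing.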

---------OCR:results-----

0123456789

0123456789

0123456789

本 日 は 晴天 ? 雨降り で す 。 日 本 語 の 文字 認識 テス ト 。

---------OCR done.-------